My old-man-playing-with-minis hobby has metastasized in a weird way. Over a year after recapping my stumblebum attempts at creating character artwork with digital tools, I’m going back to the well, because I’ve progressed by leaps and bounds with additional tools. The first post covered some seemingly-slapdash Photoshop sessions, then slightly more refined HeroForge work, but during 2022 I began using AI art apps like Wombo’s Dream and Midjourney v3 and v4 as a base, retouching those apps’ results to create much better representations of my characters and the places they live.

These pieces are (and will remain) personal work, so I think a history-and-process piece might helpfully illustrate how much effort actually goes into creating viable imagery with these tools. It’s not simply “type in a prompt and get a perfect image of just what you asked for,” so I’ve had to rely on my decades of professional creativity to refine what I get into something that feels presentable.

My Dream Died Young, Midge

I’ve never been the most with-it person when it comes to creative trends, and at this point by the time I “discover” something it’s usually over. The first time I noticed “AI Art” was at the end of 2021, when one of my map design clients (a sci-fi/fantasy author) posted some of the mood boards she’d created with the Wombo “Dream” app. I got excited about making visual representations of the fictional places I’d created for my custom role-playing maps, so I jumped in—and found out how lacking that medium still was for producing anything better than very impressionistic (let alone Impressionistic) visual wallpaper.

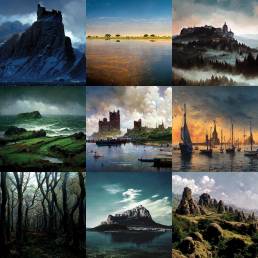

Above: Landscapes from Wombo’s “Dream” (the 9 on the left) vs Midjourney V4 (the 9 on the right).

Dream was fun for a few weeks, and I did make a few images that seemed compelling, even using them as brief visual aids during D&D sessions. However, I eventually lost interest because the next step I wanted to take—character artwork—was laughably beyond what Dream could do, so I let it go for several months. Then in the summer of 2022, an ex-colleague tweeted about what he was creating with DALL·E and Midjourney, which piqued my interest again, so I tried the latter. Midjourney’s interface (via the digital firehose of Discord) and process (increasingly complex text prompts, a monthly subscription fee) were high barriers to entry for me, but “Midge,” as some users call it, got easier with practice.

By August 2022 I was creating landscapes in Midjourney v3 to illustrate my fictional places, but the process was laborious. Each “final” piece usually resulted from multiple “re-rolls” (successive variations) of my original text prompts—basically mud-flinging sessions of both generic (“medieval harbor town”) and specific (“Welsh port settlement on the Menai Straits”) words to make a usable result. And even then, I still had to do substantial retouching work on all of those results to make something presentable. I did the extra work, though, because it helped refine an image into what I wanted—making it more “mine” and less “a computer-generated mashup of stuff scraped from all over the internet.”

Portrait of an Adventurer as a Shambling Golem

Visualizing fictional places seemed to be working well enough, so in September 2022 I tried to do the same with my characters. I wasn’t optimistic, because neither my previous tools nor new competing AI tools had been truly up to the task—and for several weeks Midjourney proved no different. The app’s then-current version, 3, required way too many iterations (re-rolls, “upscales,” and “remixes”) to even come close to representations that resembled real people. My text prompts probably weren’t optimal, so I had to refine the Midjourney v3 results with other tools—usually a combination of Photoshop, FaceApp, PS Express, and Baseten’s GFP-GAN photo restoration interface—just to barely match the character’s appearance in my mind.

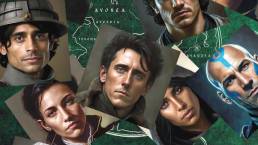

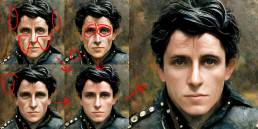

How much work? I didn’t actually track the hours (my personal projects don’t get the same discipline as freelance work), so let’s use a few characters as examples, starting with an old standby: a human bard named Cannor. His graphical journey was long and weird and would undoubtedly shatter his vain sense of self, but it was typical of the other (fifteen and counting) people I brought to visual life. Using the aforementioned tools, I took v3 Cannor from a shambling golem resembling John Kerry’s decaying corpse to a passable performer. In Photoshop, I combined pieces from four different re-rolled MJv3 upscales (hair from one, eyes from another, nose from a third, etc.), then smoothed out his hideous visage with Baseten and FaceApp before re-combining that result (a weird airbrushed teenager) with the original MJv3 montage to get this poor guy closest to his actual age of (he’s not old, he’s) 37.

The process became even more convoluted when I tried creating non-human characters, but since I rarely play those, I’m fine with these folks looking more human than not. Another example is Qiranna, an elf warlock. She wouldn’t care about looking weird, but she’s still got self-respect, so her process involved exponentially more re-rolls, upscales, and remixes (I made way too many unused v3 elf attempts) before landing on someone who looked like her. Even then, I still had lots of retouching to do, just like Cannor—for eyes, hair, nose, ears, the works—to help Qiranna resemble a real person (or rather, a painting of a real person). Midjourney users call this a “paint-over,” but it’s really just degrees of retouching. To reiterate: Midjourney v3 alone was not an optimal tool for creating character artwork, because almost every result was irreparably flawed and the app couldn’t handle details. I had to settle for close-cropped low-resolution portraits of my characters, but those are fine for simple avatars, and better than what I’d used for years before.

Re-Issue, Re-Package, Re-Evaluate

I’d accepted that painting these vulgar pictures was the best I could do until about a month ago, when Midjourney released version 4. That update contained the ability to add image URLs to each text prompt, allowing further refinement of anything created via, say, version 3. Its rollout surprised me because I rarely check the app’s Discord threads (which, like everything on Discord, feels like 24/7 chaos to me), so I jumped back in to do remedial work for each of the characters made with v3. The combination of text prompts (sometimes existing, sometimes new) and my previous images worked great, mostly because MJv4 handled the basics much better than v3.

However, I still had to substantially retouch each promising-looking image to get something resembling the character in my head, this time with ever-more-specific detail work. Ironically, in MJv4 the low-res images are better quality than anything upscaled or remixed, and even then most results are 95% unusable. My character portraits only got close to “done” when I tackled detail work like eyeballs, nostrils, stray hairs, and other random AI-generated remnants here and there. The bot still can’t render hands or fingers well at all, so I quickly abandoned potential full- or half-body poses with characters holding anything. At this point, MJv4 is still simply a base to build better work on; the bot mostly handles the simple stuff well enough, like proportions and anatomy (though some necks still stretch a bit long), but not dependably every time.

My work with the two characters mentioned above shows this pretty well. I based v4 Cannor on the v3 portrait, but (after choosing from about 50 re-rolls) he still needed a haircut, a weird adjustment for some strangely-tiny eyeballs, and custom tailoring for a cloak oddly fused with his leather armor. Qiranna needed even more work, because her purple face-paint got rendered as actual anatomical features, so I had to merge different re-rolls in Photoshop to fix that, smooth out my smash-and-grab skin edits with GFP-GAN, then age her a bit in FaceApp (which unfortunately also gave her cross-eyes). Another re-roll in MJ helped a lot, but that result still needed plenty of cosmetic edits: eye color, lip color, purple face-paint, grayer skin, a second pointed ear, and snipping stray hairs.

Several other characters needed dozens of hours’ worth of work too, as shown below: the final portrait of a second human named Zafraia resulted from a heavily-edited montage of three separate MJ renderings, first to combine one face with a different head on a cropped headshot, then to take that face/head combo and graft it onto a wider-cropped torso before taking roughly six more steps to edit her ponytail, hair, nose, leather coat, and epaulettes. Even when the initial MJv4 render process goes as well as it can, as with a second elf named Farago, it still takes many re-roll variations and lots of cosmetic work (in this case ears, eyeballs, a strawberry birthmark, and a few decades of elf-aging) in multiple additional apps to get a result that works for me.

It’s Personal, Except When It’s Not

Everything else I’d say about Midjourney’s value boils down to “inconclusive” or “unproven.” Any verdict I might reach on AI art generally or Midjourney specifically is wrapped up in its worth to me as one tool among many for use in personal creative projects. I don’t know how much I’ll keep using it. The subscription isn’t prohibitive, but it definitely has the potential to prey on folks who can’t resist clicking a button for additional “fast hours” (the rendering time for each image, allotted monthly per subscription level).

I’ve tried to stay away from the raging, corrosive debate about AI art’s ethics or lack thereof because I believe the topic is more complex than either its advocates or detractors claim. However, a reckoning feels unavoidable because, like everything else ever culturally litigated on social media, this topic’s been reduced to competing, escalating explosions of reactionary outrage, and I’m definitely allergic to that discourse. So, because I value my own sanity, I’m keeping my use of AI art to personal projects (like custom character sheets), and I won’t use it on client work.

I assume it’s only a matter of time before my map-artwork process becomes as commodified as any other design medium I’ve worked with over the decades, so I’m not afraid of potential ramifications AI art may have on what I do. Right now, based on my own use of it, I can say definitively that it’s not a shortcut to compelling portrait or landscape artwork. It’s not a push-button solution, yet, but I’m also not worried that using it invalidates my creative career or makes me any less of a creative person. If doing all that retouching and thought-work isn’t art or creativity, then I don’t know what is.